eSmart COHR

Cyber Safety

Protecting Our Children - Key Digital Rights News (25th Sept 2024)

September was a huge month for children’s digital rights and online safety – but if you blinked, you might have missed it. We’ve put together this rundown of the major developments to catch you up on what’s been happening.

Around the middle of the month, we saw four announcements in quick succession. The news came from government, at both the state and national levels, and from social media companies.

Social media age restrictions legislated in South Australia

The first announcement came on 9 September. The South Australian Government announced it planned to enact legislation compelling social media companies – like Meta and X – to enforce age restrictions on their platforms.

At the Alannah & Madeline Foundation, we acknowledged the intention behind this move, but we weren’t without reservations. We support any steps to make tech companies accountable for how children use their platforms. However, we’re also clear-eyed about the fact that age restrictions alone aren't the answer. A multipronged approach from government, tech companies and everyone in the community is needed to make the digital world safer for children.

Social media age restrictions announced at the federal level

Just one day after South Australia made its announcement, the Australian Government came out with plans to enact the same kind of legislation at the national level. Once again, we acknowledged the move as a sign the government was taking children’s wellbeing in the digital space seriously. But we also cautioned that age restrictions wouldn’t address the underlying issues that make social media platforms unsafe for children and young people.

Australia to implement Children’s Online Privacy Code

The third big piece of news, and the most encouraging policy development, came on 12 September, when the Australian Government announced it would create a Children’s Online Privacy Code. This was a truly significant development, and something the Alannah & Madeline Foundation has long advocated for.

Unlike the proposed age restrictions discussed earlier, this piece of legislation gets to some of the root issues. In particular, it addresses the intrusive practices that children are subject to on social media.

Tech companies collect users’ personal information, including children’s, which is then used to generate marketing revenue. This is done with little consideration for users’ privacy and wellbeing, and the companies in question have been shown to manipulate children’s online behaviour and exploit their vulnerabilities. A Children’s Online Privacy Code would greatly restrict these practices.

Meta announces new ‘teen accounts’ for Instagram

The fourth and final announcement came from social media giant Meta on 18 September. Instagram users under 16 will now be moved to ‘teen accounts,’ with the intention of giving parents greater control over their children’s social media use.

This announcement signals positive intent on the part of Meta. Previously, the company has tended to push responsibility for what children do on its platforms onto parents and children themselves. Although it won’t fix the whole problem, Meta’s taking accountability for the potential harms children and young people can fall prey to while using these products is a step in the right direction.

More news around the corner...

All of these changes are happening in response to increased visibility of online safety issues and calls for action from a concerned community. The fact that both government and tech companies are responding is proof that the current, largely unregulated model is unsustainable, and those in power are beginning to take notice and act accordingly.

But the fight for children’s digital rights is far from over. Whether these measures improve digital safety or fall flat will depend on how they are implemented. For now, we are cautiously optimistic and watching with interest.

Response to Meta's new Instagram ‘teen accounts’: signals some positive intent (19th Sept 2024)

Meta has announced it will introduce ‘teen accounts’ on its Instagram platform, signalling some positive intent to help make its platform safe by design for children and young people.

The move comes after many weeks of community discussion about age limits for social media access, and the Australian Government’s announcement last week that it will develop a Children’s Online Privacy Code.

Meta says the new ‘teen accounts’ will be set to private by default and include age-inappropriate content restrictions.

The CEO of the Alannah & Madeline Foundation, Ms Davies, said this is a positive step in the right direction from the social media giant.

“We are pleased to see Meta playing a more active role in addressing the risks faced by children online,” said Ms Davies.

“For too long, responsibility for children's safety online was pushed back onto parents, schools and children themselves.

“Meta’s proposed changes aim to tackle critical issues surrounding contact, content, conduct, and compulsion on their platforms through default settings for teens under 16.

“This goes some way to building in safety by design and ensuring that children’s privacy and safety online are put ahead of commercial interests,” added Ms Davies.

The Alannah & Madeline Foundation continues to advocate for a collective approach that recognises the critical role government, tech companies, and community play in making our online world safe for children to enjoy.

“We look forward to seeing more details on Meta’s age assurance strategy,” said Ms Davies.

“Key questions remain about how the company will identify children on its platforms and manage their personal information, including sensitive data like biometrics.

“As age assurance technologies evolve, it’s crucial that these measures don’t inadvertently introduce new risks, such as data misuse or increased marketing targeting.”

Ultimately, Australia needs clear, enforceable national regulation about what all digital platforms that children use do with children's personal information, with a focus on upholding the rights of the child.

The Foundation supports the Australian Government’s establishment of the Children’s Online Privacy Code, spearheaded by the Privacy Commissioner. This code has the potential to transform the digital landscape, ensuring a safer online environment for all children in Australia.

Keeping children safe online

Keeping children safe online requires collective action from governments, tech companies, and regulators to create a safer, more responsible digital environment that prioritises children's safety and well-being over commercial interests.

Multi-pronged approach to keep children safe online

- We believe it’s government’s responsibility to ensure children’s digital rights are upheld and realised – setting minimum standards based on community expectations and holding tech companies to account for not meeting these standards.

- It’s tech companies’ responsibility to prevent their services and products from being used in ways that violate children’s rights and which expose children to harms while using their services and platforms.

- And it’s up to the rest of us to take responsibility to upskill and educate ourselves and our children on how to navigate tech and the online world safely and confidently; to participate with them in their digital worlds, not just police them.

A broader safety net to address the underlying causal factors must include:

- Default Privacy & Safety Settings: all digital products and services must automatically provide the highest level of privacy and safety settings for users under 18 years, by default.

- Data: data is the currency for many tech services and products, but we must ban the commercial harvesting / collection / scraping / sale / exchange of children’s data.

- Privacy: prohibit behavioural, demographic and biometric tracking and profiling of children, and therefore ban profiled commercial advertising to children under 18.

- Recommender Systems (the algorithm): ban the use of recommender systems – software that suggests products, services, content or contacts to users based on their preferences, behaviour, and similar patterns seen in other users.

- Reporting Mechanisms: require child-friendly, age-appropriate reporting mechanisms and immediate access to expert help on all platforms.

- Independent Regulation: end self-regulation and co-regulation by the tech industry and establish a fully independent regulator with the power and resources to enforce compliance.

- Safety by Design: implement age-appropriate and safety-by-design requirements for all digital services accessed by children.

- Public Data Access: ensure public access to data and analytics for regulatory, academic, and research purposes.

Together, we can make a meaningful difference and gift children a digital world where they are free to live, learn and play safely. Read more about our Advocacy work related to the digital rights of children.

Response to Australian Government’s announcement today about national legislation for social media access (11 Oct 2024)

The Alannah & Madeline Foundation welcomes the Australian Government’s announcement today that its proposed national legislation for social media access will put the onus on tech platforms – and crucially not push the responsibility back to parents.

This is a win for children and parents and represents a key pillar of the Foundation’s ongoing advocacy to ensure children’s safety online.

The proposed legislation also recognises that social media platforms must exercise social responsibility and ensure fundamental protections are in place for children and young people.

For too long, responsibility for managing online risk has been pushed back onto parents, teachers, and to children themselves – an unacceptable, unreasonable, and unfair approach.

We are pleased to see positive steps being made by the government to prioritise the safety of children online.

We look forward to reviewing the legislation in more detail once it is released in full.

We hope that any age limits adopted, and their associated age assurance measures, prioritise children’s rights and adhere to the highest standards of safety, security, privacy, and accountability.

The Alannah & Madeline Foundation will continue to advocate for the right of all children and young people to be safe in all places where they live, learn and play – including in online spaces.

Read more about children’s digital rights and our recommendations on social media age restrictions and online safety here.

CyberSafety @ COHR with Inform & Empower

Our students will once again be participating in Cyber Safety webinars each term with Marty from Inform & Empower. Over the next two weeks, all Year 1 to 6 students will join in live to listen, discuss and reflect on different topics that help them continue to grow as positive digital citizens. As well as these webinars, students have visited The Loft with their class and completed a lesson with a Cyber Safety focus. Preps will also have lessons and resources shared with them.

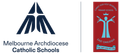

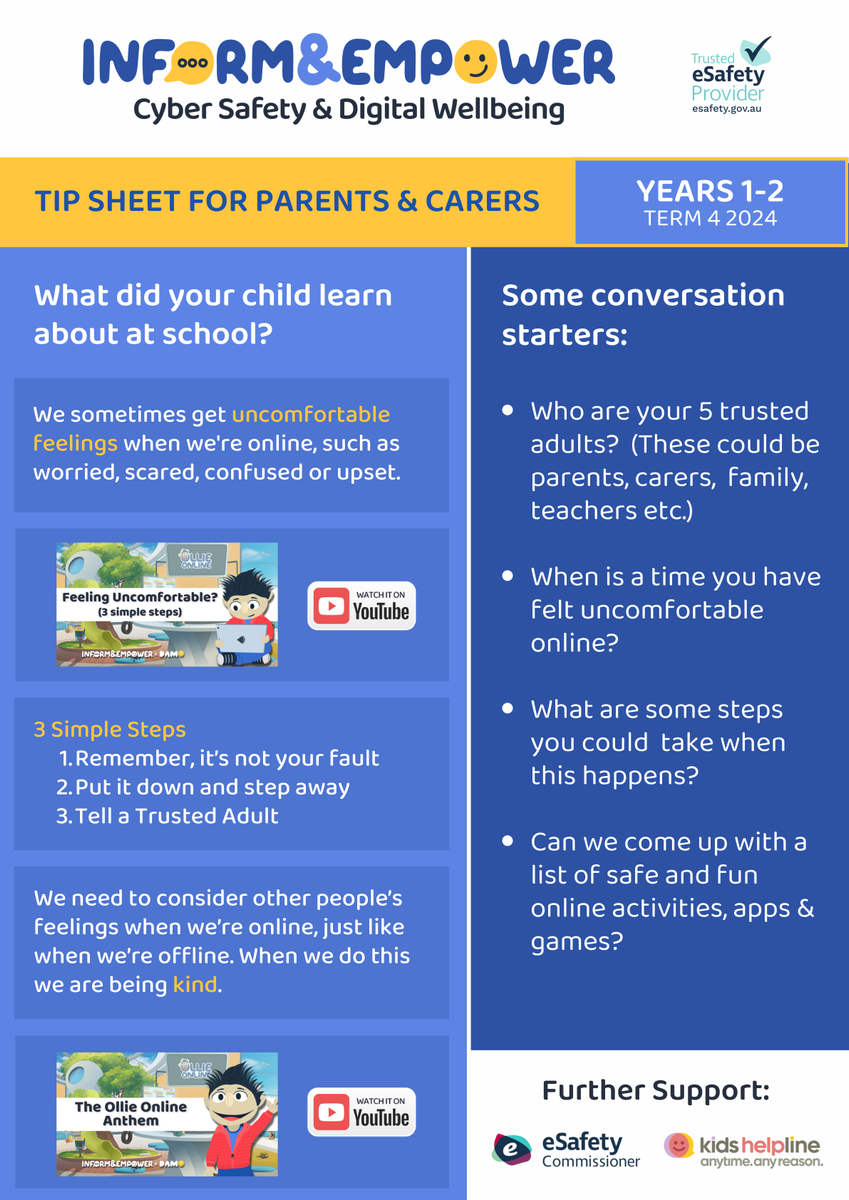

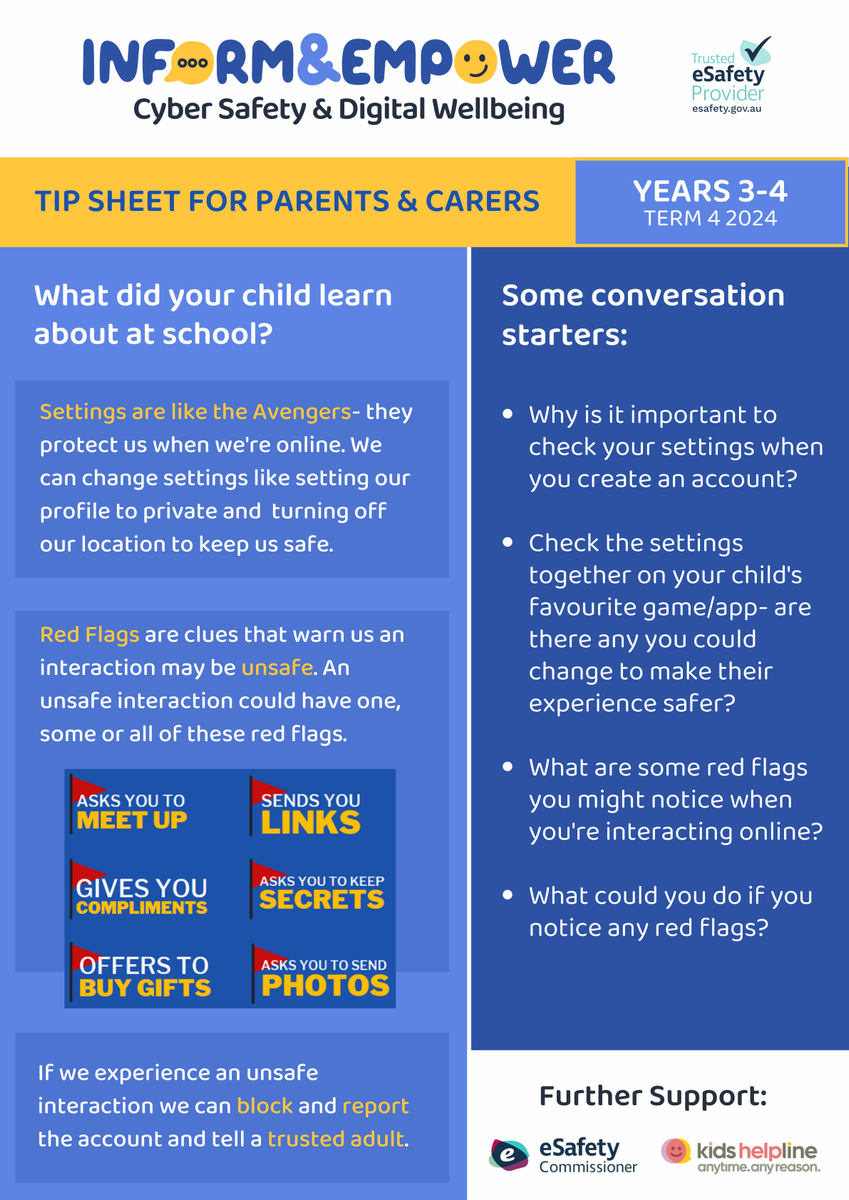

Below is the focus for each level's session as well as 'tip sheets' for you to continue the conversation at home.

P-2 focus: Online Interactions

Help seeking strategies, navigating uncomfortable interactions, early warning signs

3/4 focus: Being Safe & Secure

Settings, navigating unsafe interactions, red flags

5/6 focus: Critical Thinking

Navigating unsafe interactions, red flags, online influencers, AI

Whilst the live sessions happen in the classroom, it is essential that parents continue the conversation at home.

Inform & Empower have designed a simple "tip sheet" for parents/carers that gives an overview of the session and some conversation starters.

Parent Tip Sheets...